The challenges of AI implementation

Even though artificial intelligence is developing quickly and gaining popularity in both business and society, it still faces significant hurdles. Many challenges must be overcome before AI implementation can achieve its full potential. Many industries regard overcoming these challenges as vital for the advancement of the tech industry and of society as a whole because of the power AI presents.

Artificial intelligence is a technology that can revolutionise many fields, such as healthcare, manufacturing, space exploration, counter-terrorism, the creation of art such as paintings and music, and even the writing of award-winning movie scripts. With interest in AI exploding in the last few years, companies have started investing significant capital in the research and development of AI applications such as autonomous cars and robots.

In this article, we’re going to guide you through some of the most common challenges of AI implementation, alongside how an organisation or company can prepare to deal with them.

The challenges of AI implementation

Computing is not fast enough

AI is, essentially, an immense series of mathematical problems that learn to correct their own mistakes. In the case of image recognition, a computer must perform millions of math problems (or calculations) per second for a system to learn and recognise the patterns that are processed through it. This technique of machine learning requires a level of brute-force computational power that simply wasn’t available until recent years.
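To make "learning to correct its own mistakes" concrete, here is a minimal sketch of gradient descent fitting a single weight. It is an illustration only: the function name `train_weight`, the sample data, and the learning rate are all assumptions made for this example, and real image recognition systems repeat the same kind of update across millions of weights.

```python
def train_weight(samples, learning_rate=0.01, steps=200):
    """Fit y = w * x (approximately) by gradient descent on the mean squared error."""
    w = 0.0  # start from a deliberately wrong guess
    for _ in range(steps):
        # Gradient of mean((w * x - y) ** 2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        # Nudge the weight in the direction that reduces the error.
        w -= learning_rate * grad
    return w


# Hypothetical data generated from y = 3 * x.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
learned_w = train_weight(samples)
print(round(learned_w, 3))
```

Each pass through the loop is a handful of multiplications and additions; the computational cost described above comes from repeating this for every weight and every training example.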

Machine learning and deep learning techniques require a series of highly sophisticated calculations to be made very quickly, in microseconds or even nanoseconds. Artificial intelligence uses a lot of processing power, and technology has finally become powerful enough to enable the future of AI.

The latest chips from Intel can run over 10 trillion calculations per second. This is a massive improvement on what we had only a decade ago. Modern chips have other capabilities that make them well suited to artificial intelligence development. Many AI mathematical problems have similar structures and approaches, which means that chips can be optimised to carry out those calculations more quickly.

Another problem that these chips address is the time it takes to retrieve data. Every time a traditional chip has to retrieve a piece of data from memory, processing slows down. This is why newer chips have memory built into the processor itself, so there’s less need to transfer data back and forth between the processor and the memory device. These kinds of chips are ideal for the development of image recognition software, deep learning on unstructured data, and a variety of other artificial intelligence jobs.

Cloud computing and parallel processing systems have provided an alternative solution in the short term. However, as the total amount of data in the world continues to grow, and as deep learning drives the automated creation of increasingly complicated algorithms, the computing bottleneck will continue to slow down development progress.

Quantum computing, a concept that is still in development, is likely to be the next solution to the problem of computing power. Quantum computers differ from traditional computers because they perform calculations based on the probability of an object’s state before it is measured, instead of just a binary (1s and 0s) system. This means they have the potential to process exponentially more data than a traditional computer. However, it will be at least ten more years before quantum computing makes its way into commercial use.
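One way to see where "exponentially" comes from: describing the state of n qubits on a traditional computer takes 2^n complex numbers (amplitudes). The sketch below only counts that bookkeeping cost; it is not a quantum simulator, the helper names are hypothetical, and the 16 bytes per amplitude assumes two 64-bit floats per complex number.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats


def amplitudes_needed(num_qubits):
    """Number of complex amplitudes in the state of `num_qubits` qubits."""
    return 2 ** num_qubits


def classical_memory_bytes(num_qubits):
    """Memory a traditional computer would need just to store that state."""
    return amplitudes_needed(num_qubits) * BYTES_PER_AMPLITUDE


for n in (10, 30, 50):
    gib = classical_memory_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes_needed(n)} amplitudes, about {gib:g} GiB")
```

Every additional qubit doubles the figure, which is why even modest qubit counts quickly outgrow what traditional hardware can represent.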

Data Privacy and Security

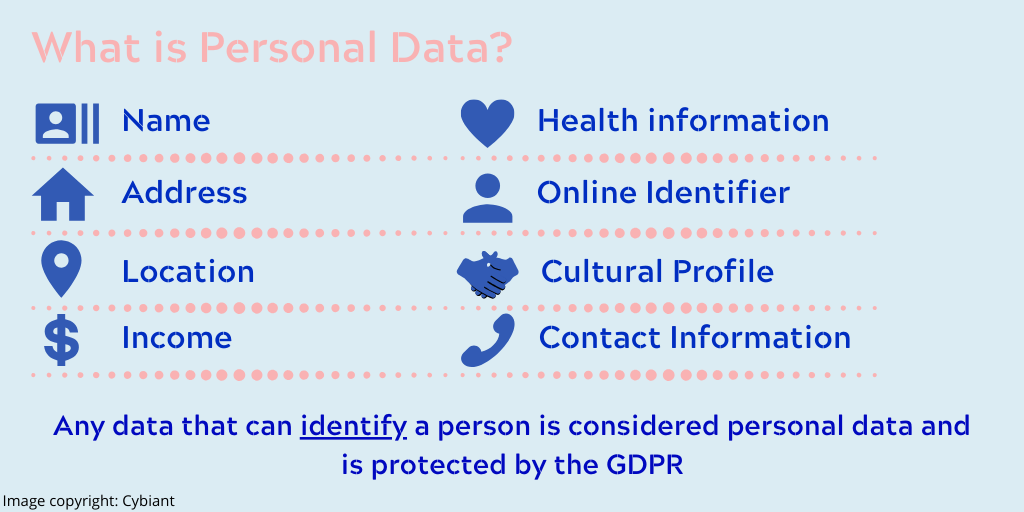

Another challenge of AI implementation is that artificial intelligence uses massive volumes of data to learn and make intelligent decisions. Machine learning and deep learning systems depend on this data, which means they may have access to sensitive data that would not otherwise have been authorised for use. These systems learn from data and improve on their own mistakes to become more efficient at their tasks. To protect consumers and the general public, the European Union has implemented the General Data Protection Regulation (GDPR), which aims to guarantee the protection of personal data. However, the regulation can be difficult to enforce because companies often store their data in warehouses located in foreign countries, which adds an extra layer of legal complication.

Figure 1: Things that are considered personal data

Speed of Communication

Although we currently have seemingly very fast network speeds, they aren’t fast enough to support complex AI applications such as the remote driving of vehicles. Current 3G and 4G networks often suffer from lag, disconnects and delays. They wouldn’t be reliable enough for technology such as remotely driven trucks, because driving requires extremely fast reaction times and a delay in communication to the truck could have fatal consequences in extreme situations.
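A rough calculation shows why delay matters so much for remote driving: the distance a vehicle covers before a delayed command can take effect is simply speed multiplied by delay. The sketch below assumes a truck travelling at 90 km/h and a handful of illustrative delays; these figures are assumptions chosen for the example, not measurements of any particular network.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Metres a vehicle travels before a delayed command can take effect."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h to m/s
    return speed_m_per_s * delay_ms / 1000   # ms to s


# Hypothetical delays, from a very responsive link to a badly lagging one.
for delay_ms in (10, 50, 100, 500):
    metres = distance_during_delay(speed_kmh=90, delay_ms=delay_ms)
    print(f"{delay_ms} ms delay at 90 km/h: {metres:.2f} m travelled")
```

At 90 km/h the truck moves 25 metres every second, so even a fraction of a second of lag translates into several metres travelled before the remote driver's input arrives.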

The introduction of fibre-optic cables and 3G and 4G networks enabled exponentially larger quantities of data to travel back and forth at high speed. None of the video streaming services we have today would be possible without them. The many devices we rely on daily, such as mobile phones and laptops, would still exist only in simple iterations with basic functionality. Simply put, it was these advancements in communication technology, along with cloud computing, that enabled the blockchain and IoT economy. Fifth-generation wireless networks (5G) promise to be the next great improvement for many industries, especially for AI.

According to the IDC, we have collectively created nine times more data since 2015 than we did between the start of civilisation and that year. This data is what drives artificial intelligence development. Figuratively speaking, oil cannot power cars while it is in the ground, and data is useless if it can’t move to where it’s needed. This is not a trivial problem, and it has been one of the key roadblocks to the growth of AI.

Bias

One of the most widely discussed challenges of AI implementation is bias. Bias has always been a part of human nature. It is the result of the necessarily limited view of the world that any single person or group can achieve. One of the biggest ethical issues of AI is that social bias can be reflected and amplified by artificial intelligence in dangerous ways. AI systems cannot, of their own accord, be “prejudiced” against women or people of a certain ethnic group, nor can they come up with their own rules for making decisions. They are programmed to make decisions based on the data they are fed. They do not have opinions, but they can learn from the opinions of others, and that’s where bias happens.

Bias presents an issue because how “good” or “bad” AI systems are depends on the data they are trained on and on how the programmer defines what is good or bad. Bad data is often associated with ethnic, communal, gender or racial biases. For example, as the investigative news site ProPublica found, a criminal justice algorithm used in Broward County, Florida, mislabeled African-American defendants as “high risk” at nearly twice the rate it mislabeled white defendants. Amazon stopped using a hiring algorithm after finding it favoured applicants based on words like “executed” or “captured” that were more commonly found on men’s resumes.
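A disparity like the one ProPublica reported can be checked by comparing false positive rates across groups, that is, how often people who did not go on to reoffend were still labelled “high risk”. The sketch below shows the calculation on a tiny made-up dataset; the records, group names and function name are hypothetical and are not taken from ProPublica's analysis.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per group: share of people who did not reoffend but were flagged high risk.

    Each record is (group, predicted_high_risk, reoffended).
    """
    flagged = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            did_not_reoffend[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / did_not_reoffend[g] for g in did_not_reoffend}


# Made-up records for illustration only.
records = [
    ("group_a", True, False), ("group_a", True, False),
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", True, False), ("group_b", False, False),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True),
]
rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: false positive rate {rate:.0%}")
```

In this toy data, group_a is wrongly flagged twice as often as group_b even though one person in each group reoffended, which is the kind of gap an audit of a real system would look for.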

If a hidden bias in algorithms that take crucial decisions goes unrecognised, it could lead to unethical and unfair results, and could cause a number of problems once it is discovered. Biases are likely to become more prominent in the future if AI systems continue to be trained on bad data. This is why it’s important for organisations that work on AI to train systems with unbiased data and create fair, easily explained algorithms.

Support from Executive Leadership

To adopt artificial intelligence into a company’s business model, support from the executive leadership should extend across the organisation at all levels. Encouraging AI adoption promotes organisational and cultural change, which is vital for the growth of an organisation. For example, success is unlikely in a company where the CMO funds a project while marketing managers are hesitant to share their expertise or data. The fact is, artificial intelligence will soon be able to do the administrative tasks that consume much of managers’ time, and do them faster, better, and at a lower cost.

AI adoption is complicated, and it is safe to assume that most organisations that want to adopt AI don’t know where to start. Identifying business cases for AI applications requires managers to have a solid understanding of current AI technologies and their limitations. It is hard for managers to identify the areas where they need to work with AI vendors, and to identify the leading vendors. Leaders also often underestimate AI requirements. While advanced, modern technology and talent are certainly needed, it’s equally important to align a company’s culture, structure, and ways of working to support the integration of artificial intelligence.

Conclusion

We hope this article has given you a greater understanding of the challenges of AI implementation. AI may ultimately prove to be cheaper, more efficient, and potentially more impartial in its actions than human beings. With standards set for training data, we can reduce bias and create AI systems that are fair and helpful for everyone. AI will also bring new criteria for success in organisations: collaboration capabilities, information sharing, experimentation, learning and decision-making effectiveness, and the ability to reach beyond limits.